By Garry Farrell, Managing Consultant

In today’s data-driven world, businesses often grapple with the challenge of managing and analysing vast amounts of transactional data. The sheer volume of data can lead to performance bottlenecks, long refresh times, and sometimes even dataset limits being exceeded. Recently, Altis embarked on a Power BI project that required handling millions of transactions efficiently. After evaluating various approaches and consulting with the client, a composite model that not only addressed these challenges but also enhanced the overall user experience was implemented.

The Challenge

The project involved generating reports from a large dataset comprising millions of transactions. The primary issues were:

- Performance: Loading all transactions at once resulted in slow report performance and delays upon opening the report.

- Data Refresh: Frequent dataset refreshes were time-consuming and impacted system performance and limited the refreshes to once a day.

- Dataset Limits: The sheer volume of data risked hitting the limits of the dataset size even though the large dataset storage format setting had been implemented.

The Solution: A Composite Model Approach

To tackle these challenges a Composite Model that leveraged both import and direct query modes for different table.

Here’s how it worked:

1. Aggregated Fact Table in Import Mode:

- An aggregated fact table summarized the transactional data. This table included key metrics and dimensions that were essential for high-level reporting.

- Using import mode aggregated data was loaded into the dataset ensuring fast access and quick report loading times.

- The aggregated data provided a high-level overview, which was sufficient for most reporting needs without overwhelming the system or causing delays for users.

- The dimension tables were also imported, as they typically have a manageable number of rows and no impact on performance or user experience.

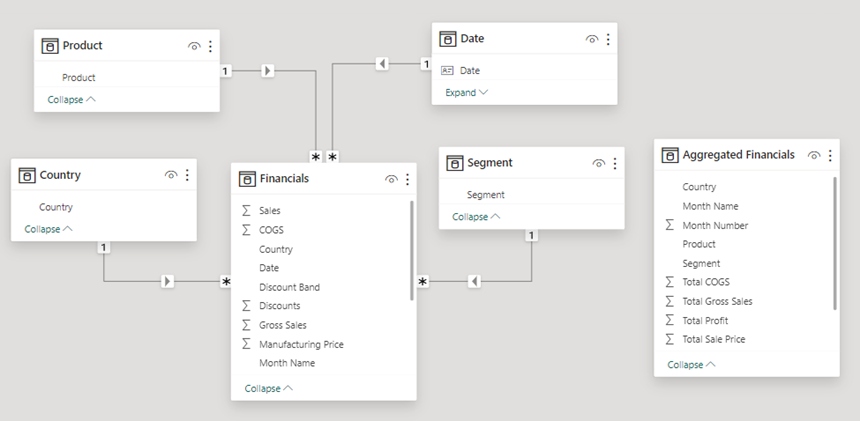

The composite model shows the aggregated fact table (import mode), dimension tables, and the Financials fact. The Financial fact uses direct query.

2. Drill Down and Drill Through Reports:

- To offer detailed insights, the solution employed drill down and drill through reports. Users could click on specific data points in the aggregated report to drill down into more detailed information.

- This approach ensured that users could explore data at a granular level without loading all transactions upfront.

3. Direct Query for Detailed Transactions:

- When users needed to see the detailed transactions, the drill through reports came into play. These reports used direct query mode to fetch data directly from the database.

- Direct query mode allowed the system to query the database in real time, fetching only the relevant transactions based on the filters applied by the user.

- This dynamic querying significantly reduced the load on the system, as only a subset of the data was retrieved, rather than the entire dataset.

Benefits of the Composite Model

The Composite Model offered several key benefits:

Improved Performance: By loading only aggregated data initially, the reports loaded quickly, enhancing the user experience. The system avoided the performance hit of loading millions of transactions at once.

Efficient Data Refresh: Since the aggregated fact table was updated periodically, the data refresh process was quicker and less resource intensive. Real-time data retrieval for detailed transactions ensured that users always had access to the most current information without the need for frequent full dataset refreshes.

Scalability: The solution was scalable, accommodating future growth in the volume of transactions. The direct query approach for detailed transactions ensured that even with increasing data, the system could handle user queries efficiently.

Avoiding Dataset Limits: By splitting the data management between import and direct query modes, the design effectively circumvented any dataset size limitations imposed by Power BI.

Implementation Insights

During the implementation, a few key considerations ensured the success of the composite model:

Efficient Aggregation: Careful design of the aggregated fact table was crucial. It needed to provide meaningful insights at a high-level while being small enough to load quickly.

Optimized Queries: The direct query reports were designed to execute optimized SQL queries. This minimized the load on the database and ensured fast response times for user queries.

User Training: Users were trained on how to use the drill down and drill through functionalities effectively. This training was essential to help them understand how to access detailed data without overwhelming the system.

Conclusion

Implementing a composite model for handling millions of transactions proved to be a game-changer for the client’s project. By leveraging the strengths of both import and direct query modes, we achieved a balance between performance and data accessibility. This approach not only solved the immediate challenges but also positioned us for future growth and scalability. If you’re facing similar issues with large datasets, consider exploring a composite model solution to optimize your data management strategy.

If you are interested in the above topic please Connect with Garry on LinkedIn or contact us via the website to find out more.